Tuesday, December 24, 2019

Tuesday, December 3, 2019

Sunday, November 10, 2019

Tuesday, September 10, 2019

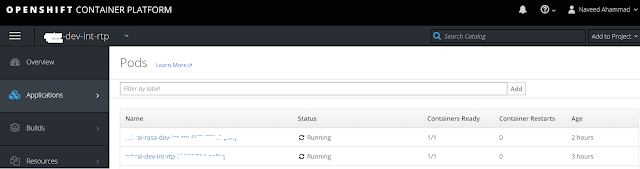

Rasa RESTful API calls

One of my requirements is to parse a message with the trained Rasa NLU model.

1) Train a Rasa model using rasa train

2) Start a Rasa server with rasa run --enable-api

3) Parse a message by running

curl -X POST http://52d9691d.ngrok.io/model/parse -H 'Content-Type: application/json' -d '{"text":"Your text"}'

I used ngrok (to expose the local Rasa server at a public URL) and Postman.
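The same call can also be made from Python. Below is a minimal sketch that uses only the standard library. It assumes the server started with `rasa run --enable-api` is reachable at the given base URL; Rasa's default port is 5005, and an ngrok URL works the same way. The helper names `build_parse_request` and `parse_message` are hypothetical.

```python
import json
import urllib.request


def build_parse_request(base_url, text):
    """Build (but do not send) a POST request for Rasa's /model/parse endpoint."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/model/parse",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_message(base_url, text, timeout=10):
    """Send the request and decode the JSON reply (needs a running Rasa server)."""
    with urllib.request.urlopen(build_parse_request(base_url, text), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With a server running, `parse_message("http://localhost:5005", "Your text")` should return the parsed message as a dict. The predicted intent is expected under the `"intent"` key, but check the response your Rasa version actually returns.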


Friday, August 30, 2019

Wednesday, August 21, 2019

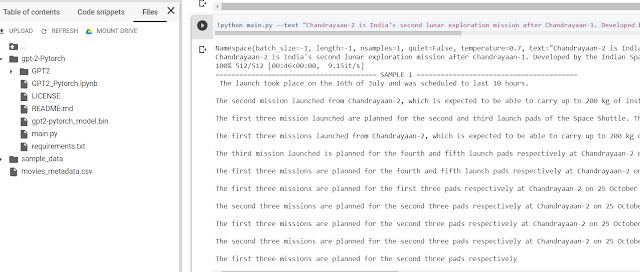

Generate Text using OpenAI GPT-2 in Python

!git clone https://github.com/graykode/gpt-2-Pytorch

import os

os.chdir('gpt-2-Pytorch')

!curl --output gpt2-pytorch_model.bin https://s3.amazonaws.com/models.huggingface.co/bert/gpt2-pytorch_model.bin

!pip install -r requirements.txt

!python main.py --text "Chandrayaan-2 is India's second lunar exploration mission after Chandrayaan-1. Developed by the Indian Space Research Organisation, the mission was launched from the second launch pad at Satish Dhawan Space Centre on 22 July 2019 at 2.43 PM IST to the Moon by a Geosynchronous Satellite Launch Vehicle Mark III."
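GPT-2 generates text one token at a time. At each step it samples the next token from the model's output scores (logits), and a common strategy is top-k sampling with a temperature. The following is a minimal pure-Python sketch of that single sampling step. It is not the code used by main.py, and the function name and toy logits are illustrative assumptions.

```python
import math
import random


def top_k_sample(logits, k=40, temperature=1.0, rng=random):
    """Sample one token index from the k highest-scoring logits.

    Lower temperature sharpens the distribution; k=1 reduces to greedy decoding.
    """
    if k < 1 or temperature <= 0:
        raise ValueError("k must be >= 1 and temperature must be > 0")
    # Indices of the k largest logits (ties keep their original order).
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    scaled = [logits[i] / temperature for i in top]
    # Softmax weights; subtracting the max keeps exp() numerically stable.
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    # random.choices normalizes the weights itself.
    return rng.choices(top, weights=weights, k=1)[0]
```

Repeating this step, appending each sampled token to the input and running the model again, produces the generated continuation.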


Free GPU, TPU on Google Colab

Happy learning and experimenting with Google Colab!

Note: data may be lost after 12 hours, when the Colab runtime is recycled.

We can run TensorBoard on Colab too.

Reference links

Google SEEDBANK

https://research.google.com/seedbank/seeds?keyword=text

https://colab.research.google.com/


Sunday, August 18, 2019

Question-Answering system with cdQA-suite

This is the era of transfer learning for NLP tasks.

I have been working on how to use my own dataset with XLNet and BERT.

I found excellent references with help from the community:

https://towardsdatascience.com/how-to-create-your-own-question-answering-system-easily-with-python-2ef8abc8eb5

https://github.com/cdqa-suite/cdQA


XLNet - A SOTA model

While working on the Q&A system, I came across pretrained NLP models. XLNet is a state-of-the-art model for Q&A applications.

XLNet combines the autoregressive and autoencoding approaches to language model pretraining.

References:

https://arxiv.org/abs/1906.08237

https://mlexplained.com/2019/06/30/paper-dissected-xlnet-generalized-autoregressive-pretraining-for-language-understanding-explained/


The paper is titled "XLNet: Generalized Autoregressive Pretraining for Language Understanding":

- Generalized: it pretrains without data corruption (masking) by using a permutation language model.

- Autoregressive: it is an autoregressive language model that also utilizes bidirectional context.

According to the paper, XLNet outperforms BERT on 20 tasks, including question answering.
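A small sketch makes the permutation idea concrete. XLNet samples a factorization order and predicts each token from the tokens that come before it in that order. Those tokens may lie on either side of it in the sentence, so the sentence itself is never shuffled. The pure-Python illustration below shows only which positions are visible, using a toy three-token sentence. It is not XLNet's two-stream attention implementation, and the helper names are hypothetical.

```python
import random


def permutation_contexts(n_tokens, order):
    """For one factorization order, list (target position, visible context positions).

    The permutation LM objective takes the expected log p(x_target | x_context)
    over many such orders, so each position eventually sees context from both sides.
    """
    if sorted(order) != list(range(n_tokens)):
        raise ValueError("order must be a permutation of 0..n_tokens-1")
    return [(pos, sorted(order[:t])) for t, pos in enumerate(order)]


def sample_order(n_tokens, rng=random):
    """Draw a random factorization order (token positions stay fixed)."""
    order = list(range(n_tokens))
    rng.shuffle(order)
    return order


tokens = ["New", "York", "is"]
for target, context in permutation_contexts(len(tokens), [2, 0, 1]):
    print(tokens[target], "<-", [tokens[i] for i in context])
```

In the order [2, 0, 1], "New" is predicted from "is", a token to its right. A left-to-right language model never does this, and it is how XLNet gets bidirectional context without masking.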


Friday, May 3, 2019

Thursday, April 25, 2019

12 Greatest Success Tips in Life

- SKETCH THE OPPORTUNITIES

- SETTING THE GOAL

- STUDY PLANNING

- SELF CONFIDENCE

- SELF ESTEEM

- SUCCESS STORIES

- STUDY TIME

- STUDY ENVIRONMENT

- STUDY NOTES

- STRATEGIES

- STRESS MANAGEMENT

- SOCIAL RESPONSIBILITY

Thursday, April 18, 2019

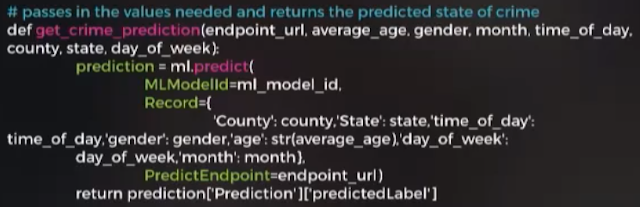

Journey with AWS SageMaker

SageMaker is a fully managed machine learning service offered by AWS. It lets you build, train and deploy machine learning models on the AWS cloud.

As part of an ML-inference-at-the-edge demo, I first prepared my ML model using AWS SageMaker.

I faced a few interesting challenges, such as policy issues and instance availability.

References:

https://aws.amazon.com/sagemaker/pricing/

https://console.aws.amazon.com/support/home


Monday, April 15, 2019

AWS IoT Greengrass ML inference

Right now I am working on a use case using AWS Greengrass.

https://aws.amazon.com/greengrass/faqs/

AWS Greengrass is a service that allows you to take a lot of the capabilities provided by the AWS IoT service and run them at the edge, closer to your devices. AWS Greengrass ensures your IoT devices can respond quickly to local events, use Lambda functions running on Greengrass Core to interact with local resources, operate with irregular connections, stay updated with over-the-air updates, and minimize the cost of transmitting IoT data to the cloud.

Deep Learning challenges at the Edge

- Resource-constrained devices: CPU, memory, storage, power consumption.

- Network connectivity: latency, bandwidth, availability. On-device prediction may be the only option.

- Deployment: updating code and models on a fleet of devices is not easy.

Value of ML inference at the Edge

- Latency

- Bandwidth

- Availability

- Privacy
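The latency and bandwidth points can be illustrated with a small sketch: inference runs locally on the device, and only noteworthy results are sent upstream. This is plain Python, not the Greengrass SDK. Here `predict` stands in for a locally deployed model and `publish` for whatever cloud messaging call the device uses. Both of these, along with the threshold value, are hypothetical.

```python
from typing import Callable, Iterable


def edge_filter(
    readings: Iterable[float],
    predict: Callable[[float], float],
    publish: Callable[[dict], None],
    threshold: float,
) -> int:
    """Score each reading locally; forward only those at or above threshold.

    Returns the number of messages published, i.e. what actually crosses the network.
    """
    sent = 0
    for index, value in enumerate(readings):
        score = predict(value)  # on-device inference: no network round trip
        if score >= threshold:
            publish({"index": index, "value": value, "score": score})
            sent += 1
    return sent


outbox = []
sent = edge_filter([0.1, 0.9, 0.5, 0.95], predict=lambda x: x, publish=outbox.append, threshold=0.8)
print(sent, "of 4 readings sent")
```

Only two of the four readings leave the device in this toy run. The decision is made locally, so it does not wait on the network.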

https://aws.amazon.com/solutions/case-studies/iot/

https://aws.amazon.com/greengrass/faqs/

Monday, April 8, 2019

Friday, April 5, 2019

Edge Computing

As a machine learning engineer in the automotive industry, today I am thrilled about edge computing technology.

Edge computing is expected to become bigger than cloud computing.

Edge computing brings memory and computing power closer to the location where it is needed.

As part of my R&D, I have been deploying my tiny TF model on Android apps and embedded devices.

It is exciting to learn more about edge computing technology.

https://www.sparkfun.com/products/15170


Monday, April 1, 2019

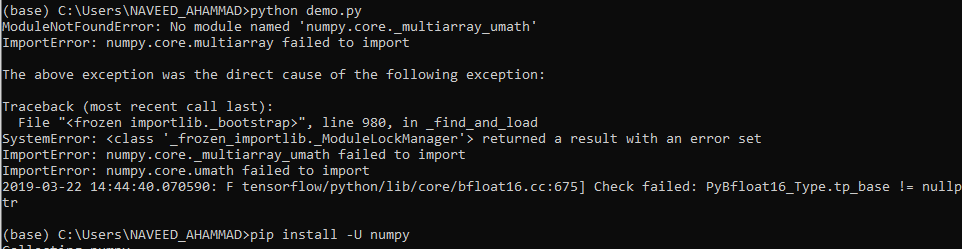

Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX

I was running a model on my brand-new 32 GB RAM laptop and got the message in the title above.

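Solution: this message is informational, not an error, and TensorFlow still runs. To hide it, set os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2' before importing TensorFlow (level 2 filters out INFO and WARNING messages from TensorFlow's C++ logging).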

Tuesday, March 26, 2019

TensorFlow Lite Demo

As part of my requirements, I have started deploying TensorFlow models on Android using TensorFlow Lite.

It has been an amazing experience.

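import tensorflow as tf

# Convert the Keras .h5 model to a TensorFlow Lite model.
# from_keras_model_file is the TF 1.x API; in TF 2.x, load the model with
# tf.keras.models.load_model and use tf.lite.TFLiteConverter.from_keras_model instead.

converter = tf.lite.TFLiteConverter.from_keras_model_file('sunroof_model.h5')

tflite_model = converter.convert()

# Write the serialized model to disk; "with" makes sure the file is closed.

with open("sunroof_model.tflite", "wb") as f:
    f.write(tflite_model)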

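Below are a few errors I ran into while developing the Android code:

- "Attempt to invoke virtual method 'void org.tensorflow.lite.Interpreter.run(java.lang.Object, java.lang.Object)' on a null object reference": the Interpreter object was still null when run() was called, i.e. it had not been created (for example because loading the model failed).

- "Cannot copy between a TensorFlowLite tensor with shape [1, 1] and a Java object with shape [1].": the Java array passed to run() must have the same shape as the corresponding model tensor, e.g. float[1][1] rather than float[1].

- "Cannot copy between a TensorFlowLite tensor with shape [1, 6] and a Java object with shape [6].": the same shape mismatch, fixed with a float[1][6] array instead of float[6].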


Friday, March 22, 2019

Thursday, February 28, 2019

Success formula in our life

- Insatiable appetite for knowledge

- Know the basics

- Identify the gaps in your knowledge

- Constantly update

- Expose the linkages

- Expose diverse views

- Write, argue and debate

Reference: https://www.youtube.com/watch?v=_svETMOY9zY

Monday, February 4, 2019

Hyperparameters with GridSearch

Building a better model involves iterating on and tuning its hyperparameters.

Grid search uses cross-validation, splitting the training data into folds for training and testing each hyperparameter combination. After all hyperparameter variants are trained, the original test data is used to validate the final model.


GridSearchCV takes a dictionary that describes the parameters that should be tried and a model to train. The grid of parameters is defined as a dictionary, where the keys are the parameters and the values are the settings to be tested.
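A pure-Python sketch helps show how much work a grid implies. It covers the two ingredients: expanding the parameter dictionary into every combination, and splitting the training data into folds. The fold split here is a plain one (contiguous, unshuffled, unstratified). The helper names are hypothetical, and this is not scikit-learn's implementation. The grid is the one used in this post.

```python
from itertools import product


def expand_grid(param_grid):
    """Return every combination of a {name: [values]} grid as a list of dicts."""
    names = sorted(param_grid)
    return [dict(zip(names, values)) for values in product(*(param_grid[n] for n in names))]


def kfold_indices(n_samples, n_folds):
    """Yield (train, test) index lists for contiguous, unshuffled folds.

    The first n_samples % n_folds folds get one extra sample.
    """
    start = 0
    for fold in range(n_folds):
        size = n_samples // n_folds + (1 if fold < n_samples % n_folds else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


grid = {"epochs": [1], "batch_size": [64], "units": [32, 64, 128], "dropout": [0.1, 0.2, 0.4]}
candidates = expand_grid(grid)
cv = 6
print(len(candidates), "candidates x", cv, "folds =", len(candidates) * cv, "fits")
```

The cost grows multiplicatively. Adding one more value to any list adds a whole new row of combinations, and every combination is trained once per fold.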

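from sklearn.model_selection import GridSearchCV
from sklearn.metrics import classification_report
# keras.wrappers.scikit_learn is deprecated in newer Keras versions (SciKeras is the suggested replacement).
from keras.wrappers.scikit_learn import KerasClassifier
from keras.models import Sequential
from keras.layers import Dense, Dropout, Flatten
import numpy as np
import time

# x_train, y_train, x_test, y_test are assumed to be loaded already:
# images of shape (N, 28, 28) and one-hot labels with 10 classes.

def dense_model(units, dropout):
    # Build and compile one candidate network for a given (units, dropout) pair.
    model = Sequential()
    model.add(Dense(units, activation='relu', input_shape=(28, 28)))
    model.add(Dropout(dropout))
    model.add(Dense(units, activation='relu'))
    model.add(Dropout(dropout))
    model.add(Flatten())
    model.add(Dense(10, activation='softmax'))
    model.compile(loss='categorical_crossentropy',
                  optimizer='adam',
                  metrics=['accuracy'])
    return model

# Every combination is tried: 1 * 1 * 3 * 3 = 9 candidates.
hyperparameters = {
    'epochs': [1],
    'batch_size': [64],
    'units': [32, 64, 128],
    'dropout': [0.1, 0.2, 0.4],
}

model = KerasClassifier(build_fn=dense_model, verbose=0)

# time.clock() was removed in Python 3.8; perf_counter() measures elapsed time.
start = time.perf_counter()

# cv=6: each of the 9 candidates is fit 6 times (54 fits), plus one final
# refit of the best combination on all training data.
grid = GridSearchCV(estimator=model, param_grid=hyperparameters, cv=6, verbose=4)
grid_result = grid.fit(x_train, y_train)
print("Best: %f using %s" % (grid_result.best_score_, grid_result.best_params_))

# Evaluate the refit best model on the held-out test set.
y_true, y_pred = np.argmax(y_test, axis=1), grid.predict(x_test)
print()
print(classification_report(y_true, y_pred))
print()
print(time.perf_counter() - start)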

Saturday, February 2, 2019

How to get the currently available GPUs in TensorFlow?

Training on a GPU can be 20-50x faster than on a CPU, depending on the workload!

Reference Link:

https://stackoverflow.com/questions/38559755/how-to-get-current-available-gpus-in-tensorflow


Wednesday, January 16, 2019
